- 10 Posts

- 16 Comments

4·1 year ago

We should stop calling these titles confusing and call them what they are: plain wrong. This is the title of the original article. People who cannot write a grammatically correct title are writing entire articles.

141·1 year ago

I don’t realistically expect such a ban to happen. Instead, I started banning everyone who posts about Musk, and my feed got a lot cleaner.

26·1 year ago

Pointing it out won’t do; we need moderation.

2·1 year ago

Thanks for not putting the paper behind a paywall!

6·1 year ago

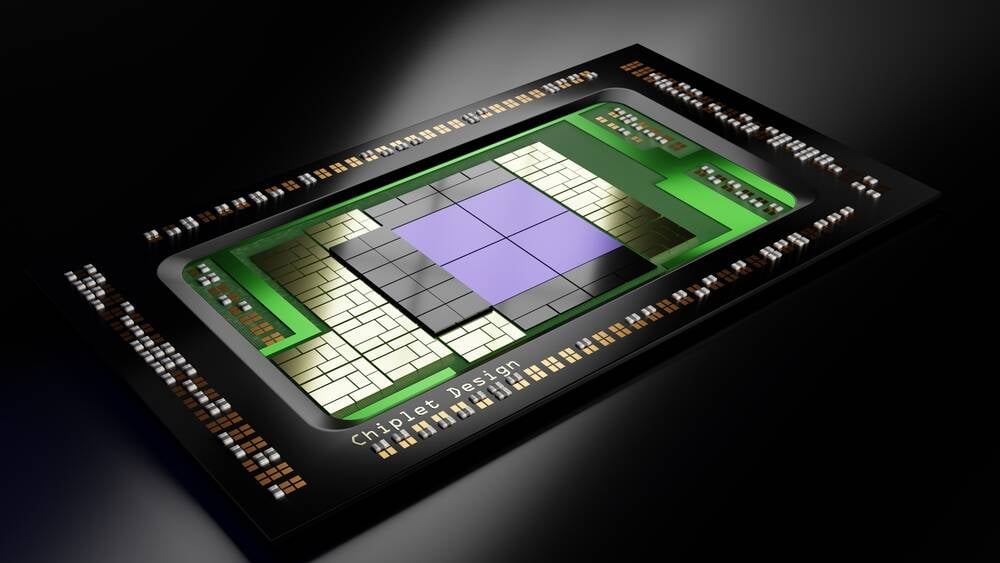

In this article, RTL refers to register transfer level. It is a way of describing hardware at a very low level: it uses registers for storage (which usually translate to flip-flops when/if synthesized), wires, and basic arithmetic and logic operations, although the terminology may vary slightly depending on which RTL language is used. It can be used to design a CPU or any ASIC (application-specific integrated circuit). The instructions may resemble processor instructions, but the end result is fundamentally different. A processor runs a set of instructions, whereas what RTL describes is usually synthesized and becomes the hardware itself that performs the operations (e.g. the arithmetic logic unit in the CPU).
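To make the register/logic split concrete, here is a rough Python model of the idea. It is only an illustration, not RTL: real RTL would be written in a hardware description language such as Verilog or VHDL and synthesized into flip-flops and gates. The 8-bit width, the `acc` accumulator register, and the `alu`/`clock_edge` names are assumptions made up for this sketch.

```python
# Illustrative sketch only (hypothetical names, not real RTL): state lives in
# registers that change only on a clock edge; between edges, combinational
# logic (wires + arithmetic/logic ops) computes the next register values.
from dataclasses import dataclass

WIDTH = 8
MASK = (1 << WIDTH) - 1  # registers are fixed-width, like a bank of flip-flops


@dataclass
class Registers:
    acc: int = 0  # hypothetical accumulator register


def alu(op: str, a: int, b: int) -> int:
    """Combinational logic: the output depends only on the current inputs."""
    if op == "add":
        return (a + b) & MASK  # overflow wraps around at WIDTH bits
    if op == "and":
        return a & b
    if op == "xor":
        return a ^ b
    raise ValueError(f"unknown op: {op}")


def clock_edge(regs: Registers, op: str, operand: int) -> Registers:
    """On each rising edge, the register latches the ALU output."""
    return Registers(acc=alu(op, regs.acc, operand))


if __name__ == "__main__":
    regs = Registers()
    for op, operand in [("add", 200), ("add", 100), ("xor", 0xFF)]:
        regs = clock_edge(regs, op, operand)
        print(op, operand, "->", regs.acc)
```

Each call to `clock_edge` stands for one clock cycle: the ALU result is computed from the current register value and latched into the register, wrapping at 8 bits (so 200 + 100 becomes 44). In synthesized hardware, `alu` would become gates and `acc` would become eight flip-flops.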

1·1 year ago

That could actually be useful (IBD gang).

15·1 year ago

COVID advice was simple, and people understood it, but many didn’t comply because they found it inconvenient. There were also COVID deniers and people who significantly underestimated it. In the places I worked, there were also people who found corporate cybersecurity measures inconvenient, but I think ignorance was always the more important reason.

I also think it isn’t enough for advice to be simple; it should also be reasonably easy to apply. “Don’t fall for phishing emails.” Sure, but how? Then it lists a bunch of tricks and hints, and people rarely remember them all, let alone apply them while going through dozens of emails a day. I think this is the article’s message.

73·1 year ago

I agree that AI can decimate the workforce. My point is that other tools have already done that, and this is not unique to AI. Take electronic chip design. The transistor was invented in the 1940s, and the first ones were large, hand-assembled devices. Today we have chips with billions of transistors. Initially people designed circuits at the transistor level; then register transfer level languages were invented and added a layer of abstraction. Today we even have high-level synthesis tools that convert C into a gate-level netlist. And consider the back end: this netlist is turned into physically placed and routed transistors in a way that meets timing, distributes clocks evenly, preserves signal and power integrity, lets heat be dissipated, etc. Since there are billions of transistors and no single way of connecting them, the tool gets creative and comes up with one solution, among virtually infinite possibilities, that satisfies your specification. You have to tell the tool what you need and occasionally give it some guidance, but what it does is incredible and creative, and it wouldn’t be possible even if you gathered all the engineers in the world and had them focus on a single complex chip without the tools’ help. So these tools have been taking engineers’ jobs for decades, but so far the industry has grown together with automation. If we reach the limits of demand or the physical limits of the technology, or if people cannot adapt to the tools fast enough by updating their job descriptions and skill sets, then the workforce gets decimated. But this isn’t unique to AI.

I am not against regulating AI; I am just saying what I think will happen. Offloading all work to AI and getting UBI would be nice, but I don’t see that happening in the near future.

429·1 year ago

Using automation tools isn’t new in engineering. One could argue that as long as a person is involved in guiding and steering the tool, the result can be copyrighted. I am sure the law will catch up as AI use becomes mainstream in the industry.

26·1 year ago

According to the article, grammatical errors are not the reason. The reason is that AI uses simpler vocabulary to mimic how average people normally talk.

5·1 year ago

Maybe accuracy could be a selling point, but it isn’t mentioned in the linked article (maybe it is mentioned in their paper?). Fingerstick-free methods that measure glucose from the surface have relatively lower accuracy. Also, what they measure is not blood glucose but interstitial glucose, so the reading is delayed. However, the article also doesn’t mention how well saliva glucose levels correlate with blood glucose levels, or how much they lag behind. I hope this research can pave the way for something beneficial eventually.

Edit: I tried to dig up the original paper, but it is paywalled.

2·1 year ago

I find it a bit tangential, but I see.

312·1 year ago

Wrong community.

3·1 year ago

The model does not keep track of where it learned something. Even if it did, it couldn’t isolate what it learned from a particular source and discard it. AI learning resembles improving your motor skills more than filling in an Excel sheet. You can delete any row from an Excel sheet. Can you forget, or even isolate, the motor skills you learned in 4th-grade art classes?

2·1 year ago

I don’t want to see ads in my feed either, but I found this interesting. Also, semiconductor tech is very industry-driven, so most news can be read as an ad. Why do you think Cadence has more design maturity? Cadence is usually preferred for analog and mixed-signal design, but for the RTL design and verification side, whatever I need and have in Cadence, I also find in Synopsys.

I don’t think this will work well, and others have already explained why, but thanks for using this community to pitch your idea. We should have more discussions like this here, rather than CEO news and tech gossip.